The Huemer Graph

The latest reply from Mike Huemer on the ethical treatment of animals, this time with a cool graph.

Bryan Caplan posted this further comment on animal welfare arguments on his blog.

I didn’t have time to address this earlier (partly because I was traveling for the talk that, coincidentally, Bryan Caplan invited me to give at GMU, on an unrelated topic). I have a few comments now.

My main reactions:

I. The argument from insects has too many controversial assumptions to be useful. We should instead look more directly at Bryan’s theoretical account of how factory farming could be acceptable.

II. That theory is ad hoc and lacks intrinsic intuitive or theoretical plausibility.

III. There are much more natural theories, which don’t support factory farming.

I.

To elaborate on (I), it looks like (after the explanations in his latest post) Bryan is assuming:

a. Insects feel pain that is qualitatively like the suffering that, e.g., cows on factory farms feel.

b. If (a) is true, it is still permissible to kill bugs indiscriminately, e.g., we don’t even have good reason to reduce our driving by 10%.

(a) and (b) are too controversial to be good starting points to try to figure out other controversial animal ethics issues. I and (I think) most others reject (a); I also think (b) is very non-obvious (especially to animal welfare advocates). Finally, note that most animal welfare advocates claim that factory farming is wrong because of the great suffering of animals on factory farms (not just because of the killing of the animals), which is mostly due to the conditions in which they are raised. Bugs aren’t raised in such conditions, and the amount of pain a bug would endure upon being hit by a car (if it has any pain at all) might be less than the pain it would normally endure from a natural death. So I think Bryan would also have to use assumption (c):

c. If factory farming is wrong, it’s wrong because it’s wrong to painfully kill sentient beings, not, e.g., because it’s wrong to raise them in conditions of almost constant suffering, nor because it’s wrong to create beings with net negative utility, etc.

So to figure out anything about factory farming using Bryan’s approach, we’d first have to settle disputes about (a), (b), and (c), none of which is obvious, and none of which is really likely to be settled. So this is not promising.

II.

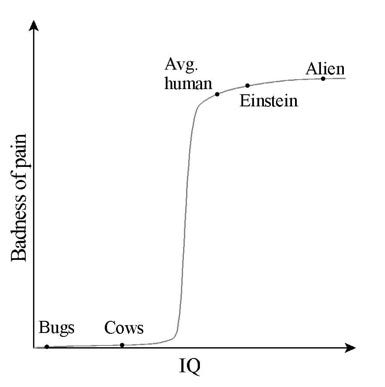

What would be more promising? Let’s just look at Bryan’s account of the badness of pain and suffering. (Note: I include all forms of suffering as bad, not merely sensory pain.) I think his view must be something like what the graph below depicts.

As your intelligence increases, the moral badness of your pain increases. But it’s a non-linear function. In particular:

i. The graph starts out almost horizontal. But somewhere between the intelligence of a typical cow and that of a typical human, the graph takes a sharp upturn, soaring up about a million times higher than where it was for the cow IQ. This is required in order to say that the pain of billions of farm animals is unimportant, and yet also claim that similar pain for (a much smaller number of) humans is very important.

ii. But then the graph very quickly turns almost horizontal again. This is required so that the interests of a very smart human, such as Albert Einstein, don’t wind up being vastly more important than those of the rest of us. It is also required so that even smarter aliens can’t inflict great pain on us for the sake of minor amusements for themselves.
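To make the shape described in (i) and (ii) concrete, here is a minimal sketch that models it as a logistic curve. This is only an illustration, not a formula taken from the graph or from anything Bryan has written. The function name, the logistic form, and every number (the intelligence scores, the midpoint, the steepness) are hypothetical; only the "factor of a million" comes from the description above.

```python
import math


def pain_weight(intelligence, low=1.0, high=1_000_000.0, midpoint=60.0, steepness=0.5):
    """Hypothetical moral weight of pain as a function of intelligence.

    A logistic curve: nearly flat at `low`, a sharp rise around `midpoint`,
    then nearly flat again at `high`. All parameters are illustrative
    assumptions, not values from the original graph.
    """
    return low + (high - low) / (1.0 + math.exp(-steepness * (intelligence - midpoint)))


# Made-up positions on a made-up intelligence scale.
cow, human, einstein, alien = 20.0, 100.0, 160.0, 300.0

# Feature (i): the weight jumps by roughly a factor of a million between cow and human.
upturn = pain_weight(human) / pain_weight(cow)

# Feature (ii): the weight barely moves above the typical human level.
plateau_einstein = pain_weight(einstein) / pain_weight(human)
plateau_alien = pain_weight(alien) / pain_weight(human)

print(f"human / cow:      {upturn:,.0f}")
print(f"einstein / human: {plateau_einstein:.6f}")
print(f"alien / human:    {plateau_alien:.6f}")
```

Note that the curve only behaves this way because `midpoint` was placed by hand between the cow and the human, and `high` was set by hand to a million. Nothing in the functional form itself tells you where the upturn should go or how large it should be.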

Sure, this is a logically possible (not contradictory) view. But it is very odd and (to me) hard to believe. It isn’t obvious to begin with why IQ makes a difference to the badness of pain. But assuming it does, features (i) and (ii) above are very odd. Is there any explanation of either of these things? Can someone even think of a possible explanation? If you just think about this theory on its own (without considering, for example, how it impacts your own interests or what it implies about your own behavior), would anyone have thought this was how it worked? Would anyone find this intuitively obvious? As a famous ethical intuiter, I must say that this doesn’t strike me as intuitive at all.

Now, that graph might be a fair account of most people’s implicit attitudes. But what is the best explanation for that:

1) That we have directly intuited the brute, unexplained moral facts that the above graph depicts, or

2) That we are biased?

I think we can know that explanation (1) is not the case. We can know that because we can just think about the major claims in this theory, and see if they’re self-evident. They aren’t.

To me, explanation (2) thrusts itself forward. How convenient that this drastic upturn in moral significance occurs after the IQ level of all the animals we like the taste of, but before the IQ level of any of us. Good thing the inexplicable upturn doesn’t occur between bug-IQ and cow-IQ (or even earlier). Good thing it goes up by a factor of a million before reaching human IQ, and not just a factor of a hundred or a thousand, because otherwise we’d have to modify our behavior anyway.

And how convenient again that the moral significance suddenly levels off again. Good thing it doesn’t just keep going up, because then smart people or even smarter aliens would be able to discount our suffering in the same way that we discount the suffering of all the creatures whose suffering we profit from.

I have no explanation for why features (i) and (ii) would hold, but I can easily explain why a human would want to claim that they do.

Imagine a person living in the slavery era, who claims that the moral significance of a person’s well-being is inversely related to their skin pigmentation (this is a brute moral fact that you just have to see intuitively), and that the graph of moral significance as a function of skin pigmentation takes a sudden, drastic drop just after the pigmentation level of a suntanned European but before that of a typical mulatto. This is a logically consistent theory. It also has the same theoretical oddness as Bryan’s theory (“Why would it work like that?”) and a similar air of rationalizing bias or self-interest (“How convenient that the inexplicable downturn occurs after the level of the people you like and before the level of the people you profit from enslaving.”).

III.

A more natural view would be, e.g., that the graph of “pain badness” versus IQ would just be a line. Or maybe a simple concave or convex curve. But then we wouldn’t be able to just carry on doing what is most convenient and enjoyable for us.

I mentioned, also, that the moral significance of IQ was not obvious to me. But here is a much more plausible theory that is in the same neighborhood. Degree of cognitive sophistication matters to the badness of pain, because:

1. There are degrees of consciousness (or self-awareness).

2. The more conscious a pain is, the worse it is. E.g., if you can divert your attention from a pain that you’re having, it becomes less bad. If there could be a completely unconscious pain, it wouldn’t be bad at all.

3. The creatures we think of as less intelligent are also, in general, less conscious. That is, all their mental states have a low level of consciousness. (Perhaps bugs are completely non-conscious.)

I think this theory is much more believable and less ad hoc than Bryan’s theory. Point 2 strikes me as independently intuitive (unlike the brute declaration that IQ matters to badness of pain). Points 1 and 3 strike me as reasonable, and while I wouldn’t say they are obviously correct, I also don’t think there is anything odd or puzzling about them. This theory does not look like it was just designed to give us the moral results that are convenient for us.

Of course, the “cost” is that this theory does not in fact give us the moral results that are most convenient for us. You can reasonably hold that the pain of a typical cow is less bad than the pain of a typical person, because maybe cow pains are less conscious than typical human pains. (Btw, the pain of an infant would also be less intrinsically bad than that of an adult. However, infants are also easier to hurt; also, excessive infant pain might cause lasting psychological damage, etc. So take that into account before slapping your baby.) But it just isn’t plausible that the difference in level of consciousness is so great that the human pain is a million times worse than the (otherwise similar) cow pain.

The post appeared first on Econlib.